Twelve essential command-line tools for any data scientist

Free yourself from the mouse and boost your productivity

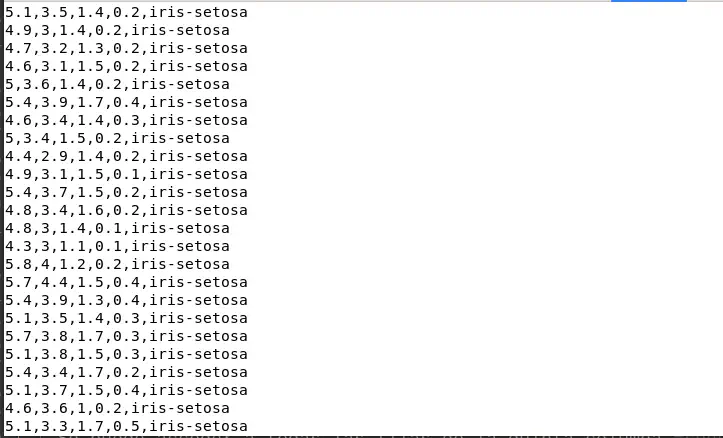

Screenshot of gnome-terminal with the sed exercise

This article is based on “Top 12 Essential Command Line Tools for Data Scientists”, originally published on KDNuggets by Matthew Mayo (2018) and republished by Dataquest. I have translated it loosely rather than literally, for the sake of clarity.

It is meant as a quick look at a dozen command-line tools for POSIX-compatible systems that can be useful for data science tasks.

The list does not include file-management commands such as pwd, ls, mkdir or rm, nor remote-session tools such as rsh or ssh. Instead, it covers utilities that are useful from a data science perspective, typically for inspecting and processing data. They usually ship with POSIX-compatible operating systems. The examples are very basic, and the author encourages you to build on them yourselves as needed. Rather than the traditional manuals for these tools, he points to their Wikipedia entries, which he considers friendlier for newcomers.

1. wget

wget is a tool for retrieving files, usually from remote resources. Its basic use is downloading a file, here iris.csv, a data file that the rest of the commands will play with:

wget https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv

--2022-10-09 19:18:08-- https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv

Resolviendo raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.108.133, 185.199.110.133, ...

Conectando con raw.githubusercontent.com (raw.githubusercontent.com)[185.199.109.133]:443... conectado.

Petición HTTP enviada, esperando respuesta... 200 OK

Longitud: 3716 (3,6K) [text/plain]

Grabando a: «iris.csv.22»

[ ] 0 --.-KB/s

iris.csv.22 100%[===================>] 3,63K --.-KB/s en 0s

2022-10-09 19:18:08 (15,5 MB/s) - «iris.csv.22» guardado [3716/3716]

Note that this particular run saved the file as iris.csv.22 because earlier copies already existed in the directory; on a first download wget saves it as iris.csv.

ls iris.csv

iris.csv

2. cat

cat is a tool for printing file contents to standard output (STDOUT) and for concatenating several files: it can combine them, append one to the end of another, number their lines, and so on.

One option is to view the file downloaded above:

cat iris.csv

sepal_length,sepal_width,petal_length,petal_width,species

5.1,3.5,1.4,0.2,setosa

4.9,3,1.4,0.2,setosa

4.7,3.2,1.3,0.2,setosa

4.6,3.1,1.5,0.2,setosa

5,3.6,1.4,0.2,setosa

5.4,3.9,1.7,0.4,setosa

4.6,3.4,1.4,0.3,setosa

5,3.4,1.5,0.2,setosa

4.4,2.9,1.4,0.2,setosa

4.9,3.1,1.5,0.1,setosa

5.4,3.7,1.5,0.2,setosa

4.8,3.4,1.6,0.2,setosa

4.8,3,1.4,0.1,setosa

4.3,3,1.1,0.1,setosa

5.8,4,1.2,0.2,setosa

5.7,4.4,1.5,0.4,setosa

5.4,3.9,1.3,0.4,setosa

5.1,3.5,1.4,0.3,setosa

5.7,3.8,1.7,0.3,setosa

5.1,3.8,1.5,0.3,setosa

5.4,3.4,1.7,0.2,setosa

5.1,3.7,1.5,0.4,setosa

4.6,3.6,1,0.2,setosa

5.1,3.3,1.7,0.5,setosa

4.8,3.4,1.9,0.2,setosa

5,3,1.6,0.2,setosa

5,3.4,1.6,0.4,setosa

5.2,3.5,1.5,0.2,setosa

5.2,3.4,1.4,0.2,setosa

4.7,3.2,1.6,0.2,setosa

4.8,3.1,1.6,0.2,setosa

5.4,3.4,1.5,0.4,setosa

5.2,4.1,1.5,0.1,setosa

5.5,4.2,1.4,0.2,setosa

4.9,3.1,1.5,0.1,setosa

5,3.2,1.2,0.2,setosa

5.5,3.5,1.3,0.2,setosa

4.9,3.1,1.5,0.1,setosa

4.4,3,1.3,0.2,setosa

5.1,3.4,1.5,0.2,setosa

5,3.5,1.3,0.3,setosa

4.5,2.3,1.3,0.3,setosa

4.4,3.2,1.3,0.2,setosa

5,3.5,1.6,0.6,setosa

5.1,3.8,1.9,0.4,setosa

4.8,3,1.4,0.3,setosa

5.1,3.8,1.6,0.2,setosa

4.6,3.2,1.4,0.2,setosa

5.3,3.7,1.5,0.2,setosa

5,3.3,1.4,0.2,setosa

7,3.2,4.7,1.4,versicolor

6.4,3.2,4.5,1.5,versicolor

6.9,3.1,4.9,1.5,versicolor

5.5,2.3,4,1.3,versicolor

6.5,2.8,4.6,1.5,versicolor

5.7,2.8,4.5,1.3,versicolor

6.3,3.3,4.7,1.6,versicolor

4.9,2.4,3.3,1,versicolor

6.6,2.9,4.6,1.3,versicolor

5.2,2.7,3.9,1.4,versicolor

5,2,3.5,1,versicolor

5.9,3,4.2,1.5,versicolor

6,2.2,4,1,versicolor

6.1,2.9,4.7,1.4,versicolor

5.6,2.9,3.6,1.3,versicolor

6.7,3.1,4.4,1.4,versicolor

5.6,3,4.5,1.5,versicolor

5.8,2.7,4.1,1,versicolor

6.2,2.2,4.5,1.5,versicolor

5.6,2.5,3.9,1.1,versicolor

5.9,3.2,4.8,1.8,versicolor

6.1,2.8,4,1.3,versicolor

6.3,2.5,4.9,1.5,versicolor

6.1,2.8,4.7,1.2,versicolor

6.4,2.9,4.3,1.3,versicolor

6.6,3,4.4,1.4,versicolor

6.8,2.8,4.8,1.4,versicolor

6.7,3,5,1.7,versicolor

6,2.9,4.5,1.5,versicolor

5.7,2.6,3.5,1,versicolor

5.5,2.4,3.8,1.1,versicolor

5.5,2.4,3.7,1,versicolor

5.8,2.7,3.9,1.2,versicolor

6,2.7,5.1,1.6,versicolor

5.4,3,4.5,1.5,versicolor

6,3.4,4.5,1.6,versicolor

6.7,3.1,4.7,1.5,versicolor

6.3,2.3,4.4,1.3,versicolor

5.6,3,4.1,1.3,versicolor

5.5,2.5,4,1.3,versicolor

5.5,2.6,4.4,1.2,versicolor

6.1,3,4.6,1.4,versicolor

5.8,2.6,4,1.2,versicolor

5,2.3,3.3,1,versicolor

5.6,2.7,4.2,1.3,versicolor

5.7,3,4.2,1.2,versicolor

5.7,2.9,4.2,1.3,versicolor

6.2,2.9,4.3,1.3,versicolor

5.1,2.5,3,1.1,versicolor

5.7,2.8,4.1,1.3,versicolor

6.3,3.3,6,2.5,virginica

5.8,2.7,5.1,1.9,virginica

7.1,3,5.9,2.1,virginica

6.3,2.9,5.6,1.8,virginica

6.5,3,5.8,2.2,virginica

7.6,3,6.6,2.1,virginica

4.9,2.5,4.5,1.7,virginica

7.3,2.9,6.3,1.8,virginica

6.7,2.5,5.8,1.8,virginica

7.2,3.6,6.1,2.5,virginica

6.5,3.2,5.1,2,virginica

6.4,2.7,5.3,1.9,virginica

6.8,3,5.5,2.1,virginica

5.7,2.5,5,2,virginica

5.8,2.8,5.1,2.4,virginica

6.4,3.2,5.3,2.3,virginica

6.5,3,5.5,1.8,virginica

7.7,3.8,6.7,2.2,virginica

7.7,2.6,6.9,2.3,virginica

6,2.2,5,1.5,virginica

6.9,3.2,5.7,2.3,virginica

5.6,2.8,4.9,2,virginica

7.7,2.8,6.7,2,virginica

6.3,2.7,4.9,1.8,virginica

6.7,3.3,5.7,2.1,virginica

7.2,3.2,6,1.8,virginica

6.2,2.8,4.8,1.8,virginica

6.1,3,4.9,1.8,virginica

6.4,2.8,5.6,2.1,virginica

7.2,3,5.8,1.6,virginica

7.4,2.8,6.1,1.9,virginica

7.9,3.8,6.4,2,virginica

6.4,2.8,5.6,2.2,virginica

6.3,2.8,5.1,1.5,virginica

6.1,2.6,5.6,1.4,virginica

7.7,3,6.1,2.3,virginica

6.3,3.4,5.6,2.4,virginica

6.4,3.1,5.5,1.8,virginica

6,3,4.8,1.8,virginica

6.9,3.1,5.4,2.1,virginica

6.7,3.1,5.6,2.4,virginica

6.9,3.1,5.1,2.3,virginica

5.8,2.7,5.1,1.9,virginica

6.8,3.2,5.9,2.3,virginica

6.7,3.3,5.7,2.5,virginica

6.7,3,5.2,2.3,virginica

6.3,2.5,5,1.9,virginica

6.5,3,5.2,2,virginica

6.2,3.4,5.4,2.3,virginica

5.9,3,5.1,1.8,virginica
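
The concatenating, appending and line-numbering uses mentioned above can be sketched as follows. This is only an illustration: part1.csv, part2.csv and combined.csv are made-up file names with invented rows, created in a temporary directory so that nothing real is touched.

```shell
# Throwaway directory so no real file is touched.
workdir=$(mktemp -d)

# Two tiny hypothetical files (names and rows invented for this sketch).
printf 'sepal_length,species\n5.1,setosa\n' > "$workdir/part1.csv"
printf '7,versicolor\n6.3,virginica\n' > "$workdir/part2.csv"

# Concatenate both files, in order, into a new one.
cat "$workdir/part1.csv" "$workdir/part2.csv" > "$workdir/combined.csv"

# Append one file to the end of an existing one with >>.
cat "$workdir/part2.csv" >> "$workdir/part1.csv"

# Number the output lines (-n is not required by POSIX,
# but both GNU and BSD cat support it).
cat -n "$workdir/combined.csv"
```

Redirecting with > creates or overwrites the target, while >> adds to the end of it, which is why the second command leaves the original header of part1.csv in place.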

3. wc

wc stands for word count and counts the words, lines or bytes of text files. With no options it prints the number of lines, words and bytes, followed by the file name.

wc iris.csv

151 151 3716 iris.csv

So the iris file has:

- 151 lines

- 151 words (each line contains no spaces, so it counts as a single word)

- 3716 bytes
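
When only one of the counts is needed, wc accepts -l, -w and -c for lines, words and bytes. A minimal sketch, assuming a small invented file called sample.csv rather than the real iris.csv:

```shell
# Small hypothetical sample (not the real iris.csv).
workdir=$(mktemp -d)
printf 'sepal_length,species\n5.1,setosa\n7,versicolor\n' > "$workdir/sample.csv"

# Reading from standard input keeps the file name out of the output;
# tr removes the padding that some wc implementations add.
lines=$(wc -l < "$workdir/sample.csv" | tr -d ' ')
words=$(wc -w < "$workdir/sample.csv" | tr -d ' ')
bytes=$(wc -c < "$workdir/sample.csv" | tr -d ' ')

echo "lines=$lines words=$words bytes=$bytes"
```

Since the header occupies one line, the number of data rows in a CSV file like this is the line count minus one.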

4. head

head shows the first ten lines of a file unless told otherwise. The number can be changed with the -n option.

head -n 5 iris.csv

sepal_length,sepal_width,petal_length,petal_width,species

5.1,3.5,1.4,0.2,setosa

4.9,3,1.4,0.2,setosa

4.7,3.2,1.3,0.2,setosa

4.6,3.1,1.5,0.2,setosa

5. tail

And to see the tail end of the file, use tail:

tail -n 5 iris.csv

6.7,3,5.2,2.3,virginica

6.3,2.5,5,1.9,virginica

6.5,3,5.2,2,virginica

6.2,3.4,5.4,2.3,virginica

5.9,3,5.1,1.8,virginica
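
head and tail also combine well. The sketch below, which assumes an invented four-line sample.csv, uses tail -n +2 to drop the header row and a head/tail pair to pick out a single line by its position.

```shell
# Small hypothetical sample (not the real iris.csv).
workdir=$(mktemp -d)
printf 'sepal_length,species\n5.1,setosa\n7,versicolor\n6.3,virginica\n' > "$workdir/sample.csv"

# Everything from line 2 onward, i.e. the data without the header row.
body=$(tail -n +2 "$workdir/sample.csv")

# Only line 3: take the first three lines, then keep the last of those.
third=$(head -n 3 "$workdir/sample.csv" | tail -n 1)

echo "$body"
echo "$third"
```

The plus sign is what makes the difference: tail -n 2 would print the last two lines, whereas tail -n +2 prints from the second line to the end.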

6. find

find searches for specific files. The path comes first, then the name, then the type.

For example, to look for iris.csv in the current working directory:

find . -name 'iris.csv' -type f

./iris.csv

To search the user's home directory instead of the current working directory:

find ~/ -name 'iris.csv' -type f
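
The -name test also accepts shell-style patterns, which is handy for locating every data file of a given kind. A small sketch, assuming an invented directory tree built just for the example:

```shell
# Hypothetical directory tree created only for this sketch.
workdir=$(mktemp -d)
mkdir -p "$workdir/data/raw"
touch "$workdir/data/iris.csv" "$workdir/data/raw/wine.csv" "$workdir/data/notes.txt"

# Quote the pattern so the shell passes it to find unexpanded.
# find descends into subdirectories by default; sort makes the order stable.
found=$(find "$workdir" -type f -name '*.csv' | sort)

echo "$found"
```

Here -type f restricts the results to regular files, so a directory that happened to be called something.csv would not be listed.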

7. cut

cut slices sections out of each line of a text file. It is most convenient with delimiter-separated files (*sv, such as CSV or TSV).

The two most used, or most basic, options are:

- -d, followed by the field delimiter in single quotes.

- -f, followed by the number of the column to operate on.

So, looking at the first row of iris.csv:

head -n 1 iris.csv

sepal_length,sepal_width,petal_length,petal_width,species

All rows of the fifth column, species, can be reached by giving the delimiter with -d ',' and the column with -f 5. To avoid printing everything, we pipe the result into head and look at the first 5 lines:

cut -d ',' -f 5 iris.csv | head -n 5

species

setosa

setosa

setosa

setosa
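
The -f option is not limited to a single column: it also takes comma-separated lists and ranges. A minimal sketch, assuming a three-line invented sample.csv with the same header as iris.csv:

```shell
# Small hypothetical sample with the same columns as iris.csv.
workdir=$(mktemp -d)
printf 'sepal_length,sepal_width,petal_length,petal_width,species\n5.1,3.5,1.4,0.2,setosa\n7,3.2,4.7,1.4,versicolor\n' > "$workdir/sample.csv"

# Columns 1 and 5 only; cut reuses the input delimiter in its output.
pairs=$(cut -d ',' -f 1,5 "$workdir/sample.csv")

# A range: columns 3 through 4.
petals=$(cut -d ',' -f 3-4 "$workdir/sample.csv")

echo "$pairs"
echo "$petals"
```

Bear in mind that cut splits on every delimiter it sees, so it is only reliable on CSV files whose fields contain no quoted commas.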

8. uniq

uniq filters its input by collapsing consecutive identical lines into one. Like other commands, it is at its most powerful when combined with others.

For example, to find out how many times each distinct value appears in the fifth column, pipe the previous expression into uniq with the -c (count) option. This works here because the rows of iris.csv are already grouped by species.

cut -d ',' -f 5 iris.csv | uniq -c

1 species

50 setosa

50 versicolor

50 virginica
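
Because uniq only compares neighbouring lines, data that is not already grouped needs to go through sort first. The following sketch illustrates the difference on an invented, deliberately shuffled list of species (species.txt is a hypothetical file name):

```shell
# Hypothetical list in which equal values are NOT adjacent.
workdir=$(mktemp -d)
printf 'setosa\nvirginica\nsetosa\nversicolor\nvirginica\nsetosa\n' > "$workdir/species.txt"

# Without sorting, no two neighbouring lines are equal, so nothing collapses.
unsorted_groups=$(uniq "$workdir/species.txt" | wc -l | tr -d ' ')

# Sorting first makes identical values adjacent, so each appears once.
sorted_groups=$(sort "$workdir/species.txt" | uniq | wc -l | tr -d ' ')

echo "unsorted=$unsorted_groups sorted=$sorted_groups"

# The usual idiom for a frequency table: sort, then count.
sort "$workdir/species.txt" | uniq -c
```

The sort | uniq -c pairing is a common way to get a quick frequency table of a categorical column straight from the terminal.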

9. awk

awk is not just a command but a standalone programming language for processing and extracting text. It can be used from the terminal as if it were a command.

A simple use is to search for the pattern /setosa/ in iris.csv and print every line where it is found, using the print statement and the $0 variable, which holds the whole line.

Again, so as not to show every line, the output is piped into head and only the first five are shown:

awk '/setosa/ { print $0 }' iris.csv | head -n 5

5.1,3.5,1.4,0.2,setosa

4.9,3,1.4,0.2,setosa

4.7,3.2,1.3,0.2,setosa

4.6,3.1,1.5,0.2,setosa

5,3.6,1.4,0.2,setosa
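
Since awk is a full language, it can also aggregate. As a rough sketch, and assuming a simplified two-column sample.csv with invented values rather than the real five-column file, this computes the mean of the first column for each species:

```shell
# Simplified hypothetical sample: two columns, invented values.
workdir=$(mktemp -d)
printf 'sepal_length,species\n5,setosa\n6,setosa\n7,versicolor\n' > "$workdir/sample.csv"

# -F ',' sets the field separator; NR > 1 skips the header row.
# sum and n are arrays indexed by the species name found in column 2.
means=$(awk -F ',' 'NR > 1 { sum[$2] += $1; n[$2]++ }
    END { for (s in sum) print s "," sum[s] / n[s] }' "$workdir/sample.csv" | sort)

echo "$means"
```

The final sort is there because awk does not guarantee any particular order when looping over an array with for (s in sum). On the real iris.csv the species would be in $5 instead of $2.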

10. grep

grep is another text-processing tool; its name stands for “global regular expression print”. Because it makes it easy to write a search, process strings and show the results, it is one of the most commonly used. The previous search for setosa would be:

grep setosa iris.csv | head -n 5

5.1,3.5,1.4,0.2,setosa

4.9,3,1.4,0.2,setosa

4.7,3.2,1.3,0.2,setosa

4.6,3.1,1.5,0.2,setosa

5,3.6,1.4,0.2,setosa
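
A few standard options cover most everyday searches. The sketch below, run against an invented sample.csv, counts matches with -c, inverts the match with -v and uses a small regular expression anchored to the start of the line.

```shell
# Small hypothetical sample (not the real iris.csv).
workdir=$(mktemp -d)
printf 'sepal_length,species\n5.1,setosa\n7,versicolor\n6.3,virginica\n4.9,setosa\n' > "$workdir/sample.csv"

# -c prints the number of matching lines instead of the lines themselves.
setosa_count=$(grep -c 'setosa' "$workdir/sample.csv")

# -v inverts the match: every line that does NOT contain "setosa".
others=$(grep -v 'setosa' "$workdir/sample.csv")

# A regular expression: lines that start with the digit 7.
starts_with_7=$(grep '^7' "$workdir/sample.csv")

echo "$setosa_count"
echo "$others"
echo "$starts_with_7"
```

Note that -v keeps the header row too, since the header does not contain the word being excluded.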

11. sed

sed is a “stream editor” (the name comes from stream editor, and it is a descendant of the ed editor), a tool for processing and transforming text.

This example rewrites the string setosa as iris-setosa:

sed 's/setosa/iris-setosa/g' iris.csv > iris-setosa.csv

head -n 5 iris-setosa.csv

sepal_length,sepal_width,petal_length,petal_width,species

5.1,3.5,1.4,0.2,iris-setosa

4.9,3,1.4,0.2,iris-setosa

4.7,3.2,1.3,0.2,iris-setosa

4.6,3.1,1.5,0.2,iris-setosa
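
sed commands can be limited to certain lines by putting an address in front of them. A minimal sketch, assuming an invented three-line sample.csv, that deletes the header and restricts a substitution to the data rows:

```shell
# Small hypothetical sample (not the real iris.csv).
workdir=$(mktemp -d)
printf 'sepal_length,species\n5.1,setosa\n7,versicolor\n' > "$workdir/sample.csv"

# 1d deletes line 1 (the header); the input file itself is not modified.
no_header=$(sed '1d' "$workdir/sample.csv")

# 2,$ restricts the substitution to line 2 through the last line, and
# the $ inside the pattern anchors "setosa" to the end of the line.
renamed=$(sed '2,$ s/setosa$/iris-setosa/' "$workdir/sample.csv")

echo "$no_header"
echo "$renamed"
```

Anchoring the pattern to the end of the line is a cheap way of making sure that only the last column is rewritten, in case the same text ever appeared elsewhere in a row.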

12. history

history is very simple and useful. It lists the command lines we have run, which the shell stores so that they can be looked up or reused in many ways, for example with a reverse search (Ctrl+R in bash).

Piped into head, it shows the first 5 lines of the history:

history | head -n 5

1 cut -d ',' -f 5 iris.csv | head -n 5

2 awk '/setosa/ { print $0 }' iris.csv | head -n 5

3 grep setosa iris.csv | head -n 5

4 sed 's/setosa/iris-setosa/g' iris.csv > iris-setosa.csv

5 head -n 5 iris-setosa.csv

Conclusion

These twelve commands are covered in the Technological Foundations module but, as the original article said, they are only a sample of what can be done in data science from the command line.

The article closes with a motto that we have folded into the title:

Free yourself from the mouse and watch your productivity grow!